Why Warm Up the Learning Rate?

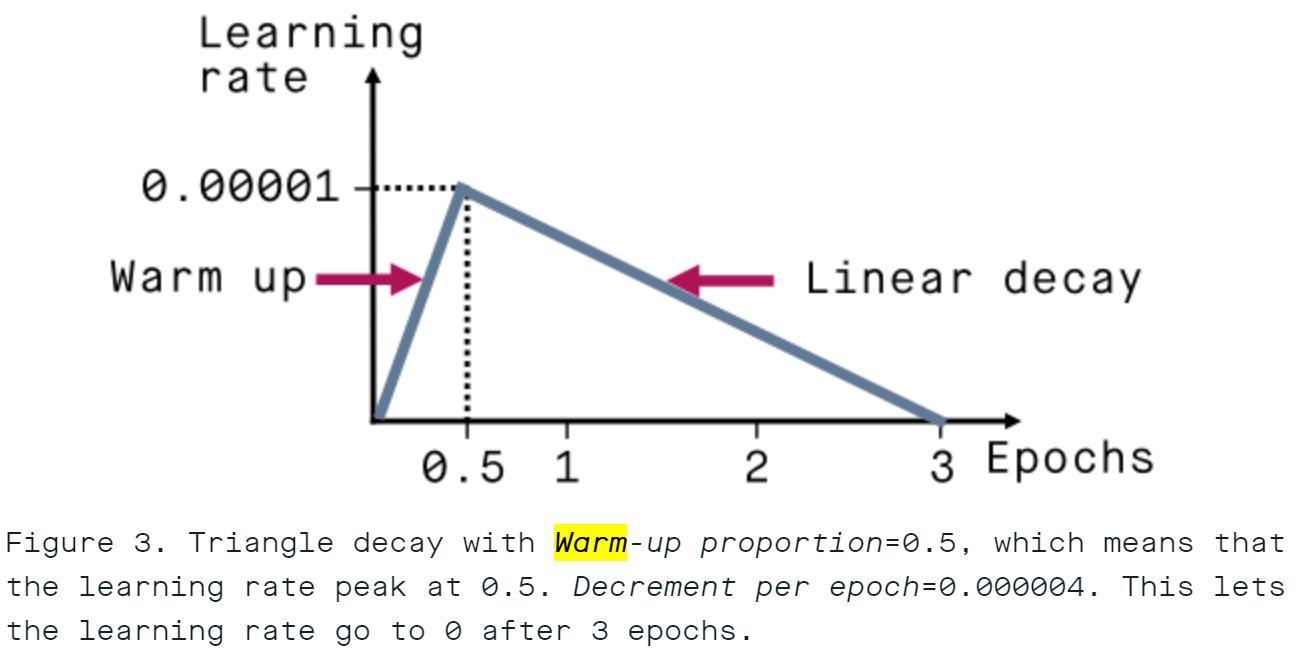

Why Do We Use Learning Rate Warm-Up in Deep Learning?

Training deep neural networks is notoriously sensitive to hyperparameters, especially the learning rate. One widely adopted technique for improving training stability and performance is learning rate warm-up. But why does warm-up help, what exactly does it do, how does the warm-up duration affect it, and how does it behave with different optimizers such as SGD and Adam?

What Is Learning Rate Warm-Up?

Learning rate warm-up is a simple technique in which the learning rate starts small and gradually increases to a target value over the first few iterations or epochs. ...
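As a minimal sketch of the idea, the snippet below assumes a *linear* ramp. The description above only says the rate increases gradually, and linear is one common choice rather than the only one. The function names `warmup_factor` and `warmup_lr` and the parameters `target_lr` and `warmup_steps` are hypothetical, introduced here purely for illustration.

```python
def warmup_factor(step: int, warmup_steps: int) -> float:
    """Multiplier in (0, 1] applied to the target learning rate.

    Assumes a linear ramp (hypothetical choice): the factor grows from
    1 / warmup_steps at step 0 to 1.0 at step warmup_steps - 1, then
    stays at 1.0 for the rest of training.
    """
    if warmup_steps <= 0:
        return 1.0  # no warm-up: use the target rate from the first step
    # (step + 1) so that the very first update already uses a non-zero rate
    return min(1.0, (step + 1) / warmup_steps)


def warmup_lr(step: int, target_lr: float, warmup_steps: int) -> float:
    """Learning rate used at a 0-indexed `step` under linear warm-up."""
    return target_lr * warmup_factor(step, warmup_steps)


if __name__ == "__main__":
    # Hypothetical settings: target rate 0.1, reached after 5 warm-up steps.
    for step in range(8):
        lr = warmup_lr(step, target_lr=0.1, warmup_steps=5)
        print(f"step {step}: lr = {lr:.3f}")
```

With these settings the printed rate climbs 0.020, 0.040, 0.060, 0.080 and then holds at 0.100. Keeping the multiplier separate from the target rate is a deliberate design choice: in PyTorch, a function like `warmup_factor` can be passed as the `lr_lambda` argument of `torch.optim.lr_scheduler.LambdaLR`, which scales each parameter group's initial learning rate by that factor. In practice the warm-up phase is usually followed by some decay schedule, which this sketch leaves out.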