CourseTA - AI Teaching Assistant

CourseTA is an agentic, AI-powered teaching assistant that helps educators process educational content, generate questions, create summaries, and build Q&A systems.

Features

- File Upload: Upload PDF documents or audio/video files for automatic text extraction

- Question Generation: Create True/False or Multiple Choice questions from your content

- Content Summarization: Extract main points and generate comprehensive summaries

- Question Answering: Ask questions and get answers specific to your uploaded content


Requirements

- Python 3.9+

- Dependencies listed in `requirements.txt`

- FFmpeg (for audio/video processing)

- Ollama (optional, for local LLM support)

Installation

Clone this repository:

```bash
git clone https://github.com/Sh-31/CourseTA.git
cd CourseTA
```

Install FFmpeg:

Linux (Ubuntu/Debian):

```bash
sudo apt update
sudo apt install ffmpeg
```

Install the required Python packages:

```bash
pip install -r requirements.txt
```

(Optional) Install Ollama for local LLM support:

Windows/macOS/Linux:

- Download and install from https://ollama.ai/

- Or, on Linux, use the installation script:

```bash
curl -fsSL https://ollama.ai/install.sh | sh
```

Pull the recommended model:

```bash
ollama pull qwen3:4b
```

Set up your environment variables (API keys, etc.) in a `.env` file. Copy the example file, then update `.env` with your credentials:

```bash
cp .env.example .env
```

Usage

Running the Application

Start the FastAPI backend:

```bash
python main.py
```

In a separate terminal, start the Gradio UI:

```bash
python gradio_ui.py
```

Architecture

CourseTA uses a microservice architecture with agent-based workflows:

- FastAPI backend for API endpoints

- LangChain-based processing pipelines with multi-agent workflows

- LangGraph for LLM orchestration

Agent Graph Architecture

CourseTA implements three main agent graphs, each designed with specific nodes, loops, and reflection mechanisms:

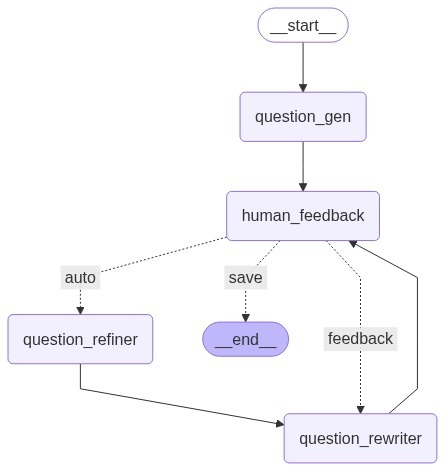

1. Question Generation Graph

The Question Generation agent follows a human-in-the-loop pattern with reflection capabilities:

Nodes:

- QuestionGenerator: Initial question creation from content

- HumanFeedback: Human interaction node with interrupt mechanism

- Router: Decision node that routes based on feedback type

- QuestionRefiner: Automatic refinement using AI feedback

- QuestionRewriter: Manual refinement based on human feedback

Flow:

- Starts with question generation

- Enters human feedback loop with interrupt

- Router decides: `save` (END), `auto` (refiner), or `feedback` (rewriter)

- Both refiner and rewriter loop back to human feedback for continuous improvement
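To make the routing concrete, here is a dependency-free Python sketch of the loop. It does not use LangGraph: the node functions are hypothetical placeholders for the LLM calls, and each item in `feedback_inputs` stands in for one human-feedback interrupt.

```python
# Dependency-free sketch of the Question Generation loop (no LangGraph).
# The node functions are hypothetical placeholders for LLM calls.

def generate_question(content: str) -> str:
    # QuestionGenerator: initial question from the content
    return f"True or false: {content}"

def refine_question(question: str) -> str:
    # QuestionRefiner: automatic, AI-driven refinement
    return question + " [auto-refined]"

def rewrite_question(question: str, feedback: str) -> str:
    # QuestionRewriter: applies the human's feedback text
    return question + f" [rewritten: {feedback}]"

def route(feedback: str) -> str:
    # Router: "save" ends the session, "auto" refines, anything else rewrites
    if feedback == "save":
        return "END"
    if feedback == "auto":
        return "refiner"
    return "rewriter"

def run_session(content: str, feedback_inputs: list[str]) -> tuple[str, list[str]]:
    question = generate_question(content)
    history = [question]  # every version is kept, as in the reflection design
    for feedback in feedback_inputs:  # each item simulates one HumanFeedback interrupt
        step = route(feedback)
        if step == "END":
            break
        if step == "refiner":
            question = refine_question(question)
        else:
            question = rewrite_question(question, feedback)
        history.append(question)  # both branches loop back to HumanFeedback
    return question, history
```

In the running service, each pause corresponds roughly to one call to the `provide_feedback` endpoint documented in the API section.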

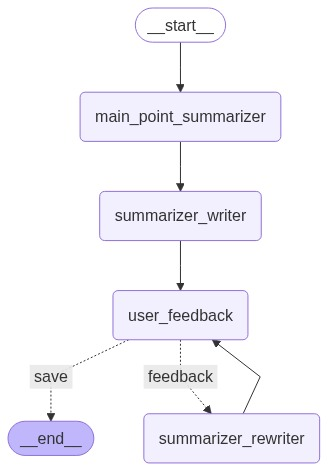

2. Content Summarization Graph

The Summarization agent uses a two-stage approach with iterative refinement:

Nodes:

- SummarizerMainPointNode: Extracts key points and creates a table of contents

- SummarizerWriterNode: Generates detailed summary from main points

- UserFeedbackNode: Human review and feedback collection

- SummarizerRewriterNode: Refines summary based on feedback

- Router: Routes to save or continue refinement

Flow:

- Sequential processing: Main Points → Summary Writer → User Feedback

- Feedback loop: Router directs to rewriter or completion

- Rewriter loops back to user feedback for iterative improvement
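A dependency-free sketch of the two-stage flow and its feedback loop. The three node functions are hypothetical placeholders (a real implementation prompts an LLM at each stage); only the control flow mirrors the graph described above.

```python
# Hypothetical placeholders for the LLM-backed summarization nodes.

def extract_main_points(text: str) -> list[str]:
    # Stage 1 (SummarizerMainPointNode): naively, one point per non-empty line
    return [line.strip() for line in text.splitlines() if line.strip()]

def write_summary(points: list[str]) -> str:
    # Stage 2 (SummarizerWriterNode): table of contents plus a summary body
    toc = "\n".join(f"{i}. {p}" for i, p in enumerate(points, start=1))
    body = " ".join(points)
    return f"Contents:\n{toc}\n\nSummary: {body}"

def rewrite_summary(summary: str, feedback: str) -> str:
    # SummarizerRewriterNode: a real version would prompt the LLM with the feedback
    return f"{summary}\n(Revised per feedback: {feedback})"

def summarize(text: str, feedback_rounds: list[str]) -> str:
    summary = write_summary(extract_main_points(text))
    for feedback in feedback_rounds:  # each round stands in for UserFeedbackNode
        if feedback == "save":  # Router: finish
            break
        summary = rewrite_summary(summary, feedback)  # Router: rewrite, then loop back
    return summary
```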

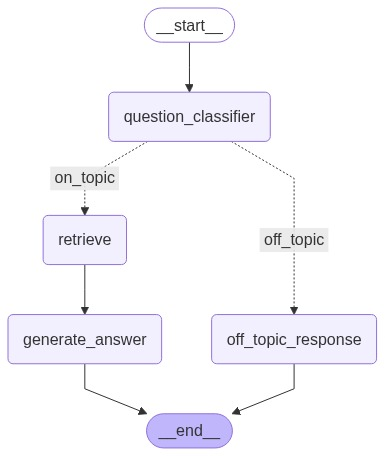

3. Question Answering Graph

The Q&A agent classifies each question as on-topic or off-topic with respect to the uploaded content, and retrieves relevant context for on-topic questions:

Nodes:

- QuestionClassifier: Analyzes question relevance and retrieves context

- OnTopicRouter: Routes based on question relevance to content

- Retrieve: Fetches relevant document chunks using semantic search

- GenerateAnswer: Creates contextual answers from retrieved content

- OffTopicResponse: Handles questions outside the content scope

Flow:

- Question classification with embedding-based relevance scoring

- Conditional routing: on-topic questions go through retrieval pipeline

- Off-topic questions receive appropriate redirect responses

- No loops - single-pass processing for efficiency
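The exact scoring is not specified above, so the following is a minimal sketch under stated assumptions: relevance is cosine similarity between a question embedding and chunk embeddings, and a question is on-topic when its best match clears a fixed threshold. The `threshold` and `k` values are illustrative, and the toy two-dimensional vectors stand in for real embeddings.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity; returns 0.0 if either vector is all zeros
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def classify_and_retrieve(question_vec: list[float],
                          chunks: list[tuple[str, list[float]]],
                          threshold: float = 0.5,
                          k: int = 2) -> tuple[str, list[str]]:
    """Return ("on_topic", top-k chunk texts) or ("off_topic", [])."""
    scored = sorted(((cosine(question_vec, vec), text) for text, vec in chunks),
                    reverse=True)
    # OnTopicRouter: if even the best chunk is below the threshold, treat as off-topic
    if not scored or scored[0][0] < threshold:
        return "off_topic", []
    # Retrieve: keep the k most similar chunks as context for GenerateAnswer
    return "on_topic", [text for _, text in scored[:k]]
```

Because there is no loop, each question costs one scoring pass and at most one answer generation.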

Key Architectural Features

Human-in-the-Loop Design:

- Strategic interrupt points for human feedback

- Continuous refinement loops in generation and summarization

- User control over when to complete or continue refining

Reflection Agent Architecture:

- Feedback incorporation mechanisms

- History tracking for context preservation

- Iterative improvement through dedicated refiner/rewriter nodes

Async API Architecture

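CourseTA's API is built on asynchronous FastAPI endpoints and offers two response styles: the question generation endpoints return each result in a single response, while the summarization and Q&A endpoints stream their output as it is generated (`text/event-stream`), so the UI can display results in real time.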
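The difference between the two response styles can be sketched with plain `asyncio`. This is an illustration, not CourseTA's code: `generate_tokens` is a hypothetical stand-in for a model that yields output incrementally, and the `data: ...` framing assumes server-sent events. In FastAPI, an async generator like `streaming_response` would typically be returned through `StreamingResponse(..., media_type="text/event-stream")`.

```python
import asyncio
from typing import AsyncIterator

async def generate_tokens(text: str) -> AsyncIterator[str]:
    # Hypothetical stand-in for an LLM that yields output piece by piece
    for word in text.split():
        await asyncio.sleep(0)  # give control back to the event loop, like a real awaited call
        yield word + " "

async def complete_response(text: str) -> str:
    # Non-streaming style: wait for every token, then return one payload
    return "".join([tok async for tok in generate_tokens(text)]).strip()

async def streaming_response(text: str) -> AsyncIterator[str]:
    # Streaming style: forward each token as soon as it exists, framed as an SSE event
    async for tok in generate_tokens(text):
        yield f"data: {tok.strip()}\n\n"
```

Both functions share one event loop, so a slow generation does not block other requests while it awaits.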

API Documentation

File Processing APIs

1. Upload File

Upload PDF documents or audio/video files for text extraction and processing.

URL: /upload_file/

Method: POST

Content-Type: multipart/form-data


Supported Formats:

- PDF: `.pdf` files

- Audio: `.mp3`, `.wav`

- Video: `.mp4`, `.avi`, `.mov`, `.mkv`, `.flv`
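A client can reject unsupported files before uploading them. A minimal sketch; `detect_media_kind` is a hypothetical client-side helper, not part of CourseTA, and it matches on the file extension only (it does not inspect the file contents):

```python
from pathlib import Path

# Extension lists copied from the supported formats above
SUPPORTED_FORMATS = {
    "pdf": {".pdf"},
    "audio": {".mp3", ".wav"},
    "video": {".mp4", ".avi", ".mov", ".mkv", ".flv"},
}

def detect_media_kind(filename: str) -> str:
    """Return 'pdf', 'audio' or 'video'; raise ValueError for anything else."""
    suffix = Path(filename).suffix.lower()  # case-insensitive: "Lecture.MP4" -> ".mp4"
    for kind, suffixes in SUPPORTED_FORMATS.items():
        if suffix in suffixes:
            return kind
    raise ValueError(f"Unsupported file type: {suffix or '(none)'}")
```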

2. Get Extracted Text

Retrieve the processed text content for a given asset ID.

URL: /get_extracted_text/{asset_id}

Method: GET

Path Parameters:

- `asset_id`: The unique identifier returned by the file upload endpoint
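A minimal standard-library client for this endpoint. `BASE_URL` is an assumption (FastAPI apps served with Uvicorn listen on port 8000 by default, but check how `main.py` starts the server), as is the JSON response format; both helper names are hypothetical:

```python
import json
import urllib.request
from urllib.parse import quote

BASE_URL = "http://localhost:8000"  # assumption: adjust to wherever main.py serves the API

def extracted_text_url(asset_id: str, base_url: str = BASE_URL) -> str:
    # Percent-encode the ID so it is always a single, safe path segment
    return f"{base_url}/get_extracted_text/{quote(asset_id, safe='')}"

def get_extracted_text(asset_id: str, base_url: str = BASE_URL) -> dict:
    # GET the extracted text; assumes the endpoint returns a JSON object
    with urllib.request.urlopen(extracted_text_url(asset_id, base_url)) as resp:
        return json.load(resp)
```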


Question Generation APIs

3. Start Question Generation Session

Generate questions from uploaded content with human-in-the-loop feedback.

URL: /api/v1/graph/qg/start_session

Method: POST

Request Body parameters:

- `asset_id`: Asset ID from file upload (required)

- `question_type`: Question type, `"T/F"` for True/False or `"MCQ"` for Multiple Choice (required)
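The two parameters can be validated client-side before calling the endpoint. A sketch assuming the body is sent as JSON with exactly these field names; `start_session_body` is a hypothetical helper:

```python
import json

VALID_QUESTION_TYPES = {"T/F", "MCQ"}  # the two types accepted by start_session

def start_session_body(asset_id: str, question_type: str) -> bytes:
    """Build a JSON body for POST /api/v1/graph/qg/start_session."""
    if not asset_id:
        raise ValueError("asset_id is required")
    if question_type not in VALID_QUESTION_TYPES:  # exact, case-sensitive match
        raise ValueError(f"question_type must be one of {sorted(VALID_QUESTION_TYPES)}")
    return json.dumps({"asset_id": asset_id, "question_type": question_type}).encode("utf-8")
```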


4. Provide Question Feedback

Provide feedback to refine generated questions or save the current question.

URL: /api/v1/graph/qg/provide_feedback

Method: POST

Request Body parameters:

- `thread_id`: Session ID from `start_session` (required)

- `feedback`: Feedback text for a manual rewrite, `"auto"` for automatic refinement, or `"save"` to finish (required)
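A sketch of preparing the feedback call with the standard library, assuming a JSON body and a hypothetical `http://localhost:8000` base URL; `feedback_request` is illustrative, not part of CourseTA:

```python
import json
import urllib.request

def feedback_request(thread_id: str, feedback: str,
                     base_url: str = "http://localhost:8000") -> urllib.request.Request:
    """Prepare (but do not send) POST /api/v1/graph/qg/provide_feedback."""
    body = json.dumps({"thread_id": thread_id, "feedback": feedback}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/v1/graph/qg/provide_feedback",
        data=body,
        headers={"Content-Type": "application/json"},  # assumption: JSON request body
        method="POST",
    )
```

Send it with `urllib.request.urlopen(feedback_request(thread_id, "auto"))`, and keep sending free-text feedback or `"auto"` until you send `"save"`.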


Content Summarization APIs

5. Start Summarization Session (Streaming)

Generate content summaries with real-time streaming output.

URL: /api/v1/graph/summarizer/start_session_streaming

Method: POST

Response Content-Type: text/event-stream

Request Body parameters:

- `asset_id`: Asset ID from file upload (required)
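The exact event names and payload shapes are defined by the server. This sketch only assumes the stream follows the standard server-sent events wire format (`event:` and `data:` fields, events separated by blank lines, lines starting with `:` as comments); `parse_sse` is a hypothetical helper:

```python
def parse_sse(stream_text: str) -> list[dict[str, str]]:
    """Split a text/event-stream body into {'event': ..., 'data': ...} dicts."""
    events: list[dict[str, str]] = []
    event_type, data_lines = "message", []
    for line in stream_text.splitlines():
        if not line:  # a blank line dispatches the pending event
            if data_lines:
                events.append({"event": event_type, "data": "\n".join(data_lines)})
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment or keep-alive line
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # one leading space after the colon is not part of the value
            if field == "event":
                event_type = value
            elif field == "data":
                data_lines.append(value)  # multiple data lines are joined with newlines
    return events
```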


6. Provide Summarization Feedback (Streaming)

Refine summaries based on user feedback with streaming responses.

URL: /api/v1/graph/summarizer/provide_feedback_streaming

Method: POST

Response Content-Type: text/event-stream

Request Body parameters:

- `thread_id`: Session ID from `start_session_streaming` (required)

- `feedback`: Feedback text, or `"save"` to finish (required)


Question Answering APIs

7. Start Q&A Session (Streaming)

Answer questions based on uploaded content with streaming responses.

URL: /api/v1/graph/qa/start_session_stream

Method: POST

Response Content-Type: text/event-stream

Request Body parameters:

- `asset_id`: Asset ID from file upload (required)

- `initial_question`: The first question to ask about the content (required)


8. Continue Q&A Conversation (Streaming)

Continue an existing Q&A session with follow-up questions.

URL: /api/v1/graph/qa/continue_conversation_stream

Method: POST

Response Content-Type: text/event-stream


Headers for Streaming APIs

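A minimal standard-library client sketch for the streaming endpoints. The headers are assumptions: it sends a JSON body (`Content-Type: application/json`) and asks for an event stream (`Accept: text/event-stream`). `streaming_request` and `iter_stream_lines` are hypothetical helpers, and the base URL is a placeholder.

```python
import json
import urllib.request
from typing import Iterable, Iterator

def streaming_request(path: str, payload: dict,
                      base_url: str = "http://localhost:8000") -> urllib.request.Request:
    """Prepare a POST to a streaming endpoint, asking for an event stream back."""
    return urllib.request.Request(
        base_url + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",  # assumption: JSON request body
            "Accept": "text/event-stream",       # assumption: SSE response
        },
        method="POST",
    )

def iter_stream_lines(resp: Iterable[bytes]) -> Iterator[str]:
    # An HTTP response object iterates line by line, so each line is yielded
    # as soon as it arrives instead of after the whole body is read
    for raw in resp:
        yield raw.decode("utf-8").rstrip("\r\n")
```

For example, open `urllib.request.urlopen(streaming_request("/api/v1/graph/qa/start_session_stream", {"asset_id": asset_id, "initial_question": question}))` in a `with` block and loop over `iter_stream_lines(resp)` to print each line as it arrives.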